AI Agents, from 0 to 1

There's a lot of noise about AI agents. Let's understand them from first principles.

Introduction

Every week, we’re seeing a flood of AI posts. It can be difficult to even define what an AI agent actually is.

In this article, we’ll explore what the actual components of a basic AI agent are, through 5 levels of increasing capability.

By the end, we’ll have an AI agent that can swap Ethereum (ETH) for dollars (USDC) and vice versa.

You can find the code used for this demo here, and play with it yourself.

Each example contains screenshots from the chat, and an architecture diagram showing the underlying components.

By the end, you should have an understanding of all the basics, and be able to easily create your own AI agents.

In the real world, agents go way beyond level 5. You’ll have agents talking to agents, workflows, human-in-the-loop review, observability, QA and other advanced topics. The goal of this article is to demystify the core building block of modern agentic systems, which is the agent itself.

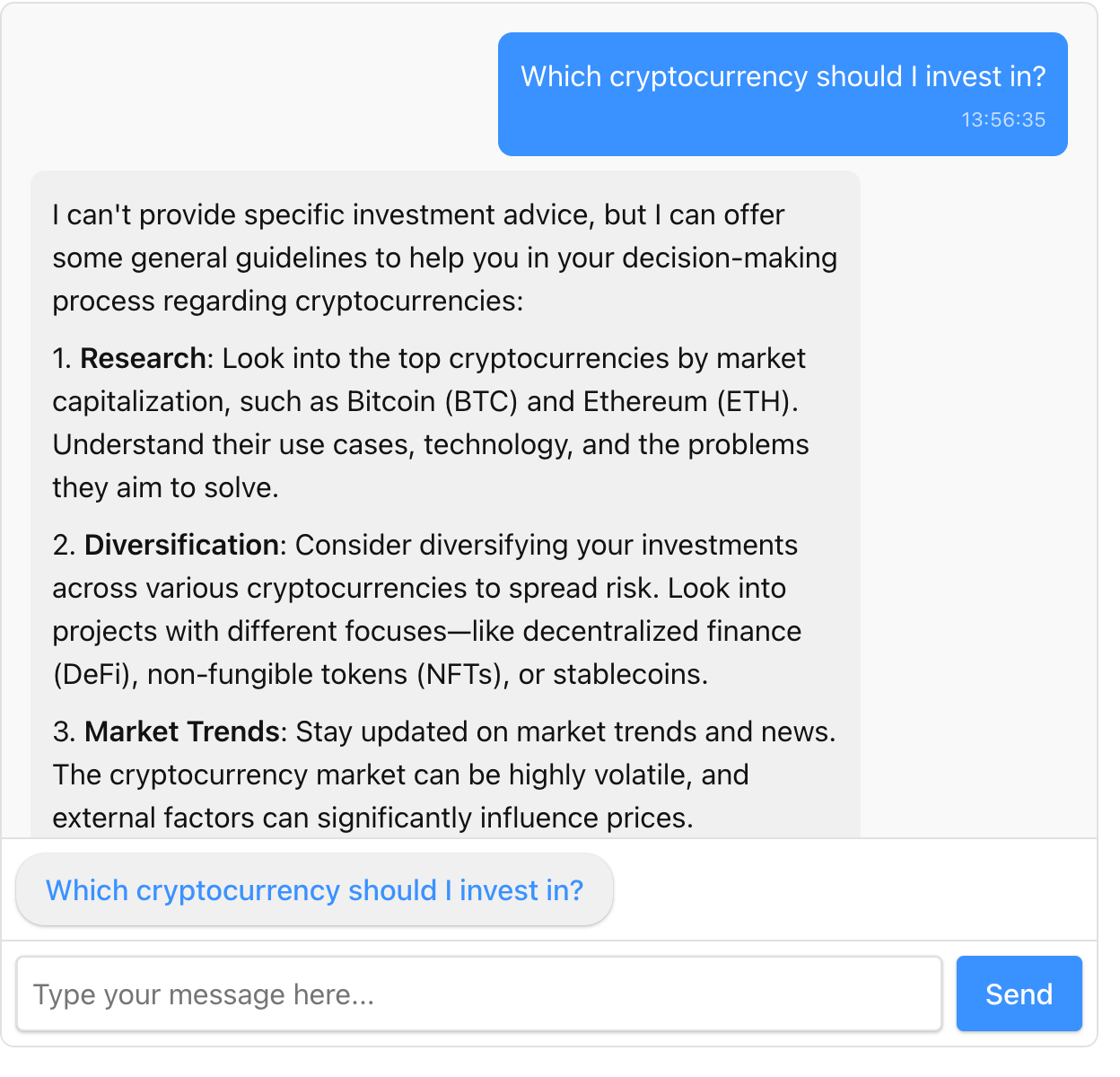

1. Basic LLM – Stateless Prompt/Response

At this level, you’re interacting with a raw large language model (LLM), like GPT-4, in its most basic form. It processes each message independently, with no memory of prior inputs. Every prompt is evaluated in isolation, and the model’s output is purely reactive.

In this example, we ask which cryptocurrency we should invest in.

This works for quick generation tasks or question-answering, but it completely falls apart once you need to ask a follow-up question, because the model never sees the earlier exchange.
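To make the stateless behavior concrete, here is a minimal sketch. It assumes a hypothetical `stub_model` function standing in for a real LLM API call; the names `stub_model` and `ask_stateless` are illustrative and not taken from the demo code.

```python
from typing import Callable

Message = dict[str, str]


def stub_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for a real LLM API call."""
    last = messages[-1]["content"]
    return f"(model saw {len(messages)} message(s); last: {last!r})"


def ask_stateless(
    user_text: str,
    model: Callable[[list[Message]], str] = stub_model,
) -> str:
    # Only the current message is sent; nothing from earlier turns is included.
    return model([{"role": "user", "content": user_text}])


first = ask_stateless("Which cryptocurrency should I invest in?")
follow_up = ask_stateless("Why that one?")
print(first)
print(follow_up)
```

The second call carries no trace of the first, so "that one" has nothing to refer to.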

2. Agent with Context – Adds Short-Term Memory

Here, we introduce short-term context, typically by preserving a limited chat history. This allows the agent to maintain conversational continuity—it remembers what was just said, and adjusts responses accordingly.

The result is more consistent, relevant dialogue. However, without intentional prompts or structure, the agent’s behavior can still drift or become verbose over time.
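One way to sketch short-term memory is a session object that resends a bounded window of recent messages on every turn. This is an illustration under assumptions: `stub_model` is a hypothetical placeholder for the real model call, and the `max_messages` limit is an arbitrary choice.

```python
from typing import Callable

Message = dict[str, str]


def stub_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for a real LLM API call."""
    return f"(model saw {len(messages)} message(s))"


class ChatSession:
    def __init__(
        self,
        model: Callable[[list[Message]], str] = stub_model,
        max_messages: int = 6,
    ) -> None:
        self.model = model
        self.max_messages = max_messages
        self.history: list[Message] = []

    def send(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        # Keep only the most recent messages so the prompt stays bounded.
        self.history = self.history[-self.max_messages:]
        reply = self.model(self.history)
        # Store the reply so the next turn can refer back to it.
        self.history.append({"role": "assistant", "content": reply})
        return reply


session = ChatSession(max_messages=4)
print(session.send("Which cryptocurrency should I invest in?"))
print(session.send("Why that one?"))
```

Because the window is bounded, the oldest messages eventually fall out of view, which is why this counts as short-term memory only.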

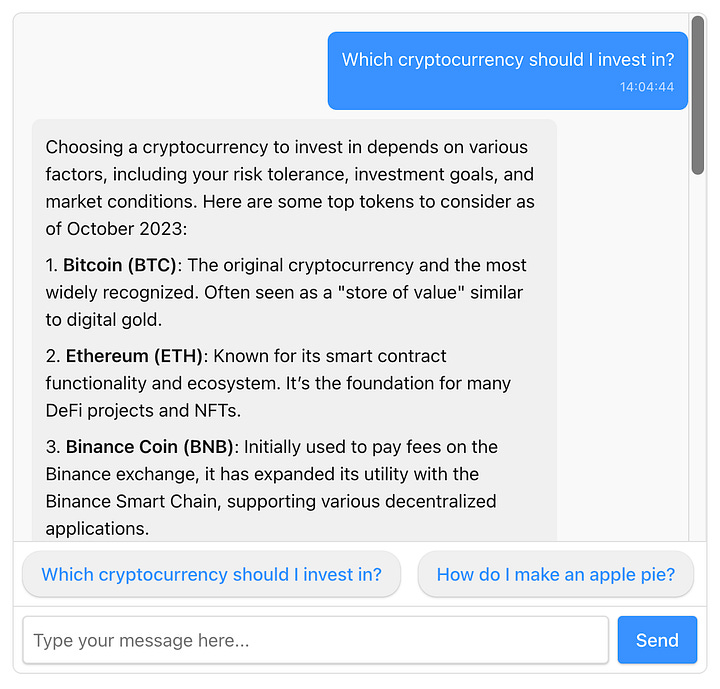

3. Agent with Context and System Prompt – Guided Intent

A system prompt is added—an invisible instruction that tells the agent how to behave, respond, and interpret the user’s intent. This guides the tone, boundaries, and “personality” of the agent.

As you can see, we are now getting more specific recommendations. We are also getting some advice on various investment strategies.

Below is the architecture diagram. We’ve now added in a system prompt.

If the user tries to go in a different direction, the agent can guide them back on track.

Now we have something more coherent and focused. The agent knows its role, retains recent memory, and aligns better with the user’s goals. This is where true persona-based agents begin to emerge.
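In code, the system prompt is usually just the first message of every request, placed ahead of the recent history. The sketch below assumes the common role/content message layout; the prompt wording and the `build_messages` helper are illustrative, not the demo’s actual prompt or code.

```python
Message = dict[str, str]

# Illustrative prompt text; a real agent would tune this carefully.
SYSTEM_PROMPT = (
    "You are a crypto trading assistant. Only discuss ETH and USDC. "
    "If the user goes off-topic, politely steer them back."
)


def build_messages(
    history: list[Message],
    user_text: str,
    system_prompt: str = SYSTEM_PROMPT,
    max_history: int = 6,
) -> list[Message]:
    # The system prompt always comes first and is never trimmed away.
    recent = history[-max_history:] if max_history > 0 else []
    return [
        {"role": "system", "content": system_prompt},
        *recent,
        {"role": "user", "content": user_text},
    ]


history = [
    {"role": "user", "content": "Which cryptocurrency should I invest in?"},
    {"role": "assistant", "content": "Let's look at ETH."},
]
messages = build_messages(history, "What's a good pizza recipe?")
print([m["role"] for m in messages])
```

The user never sees the system message, but the model receives it on every turn, which is what lets the agent steer an off-topic question back to its role.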

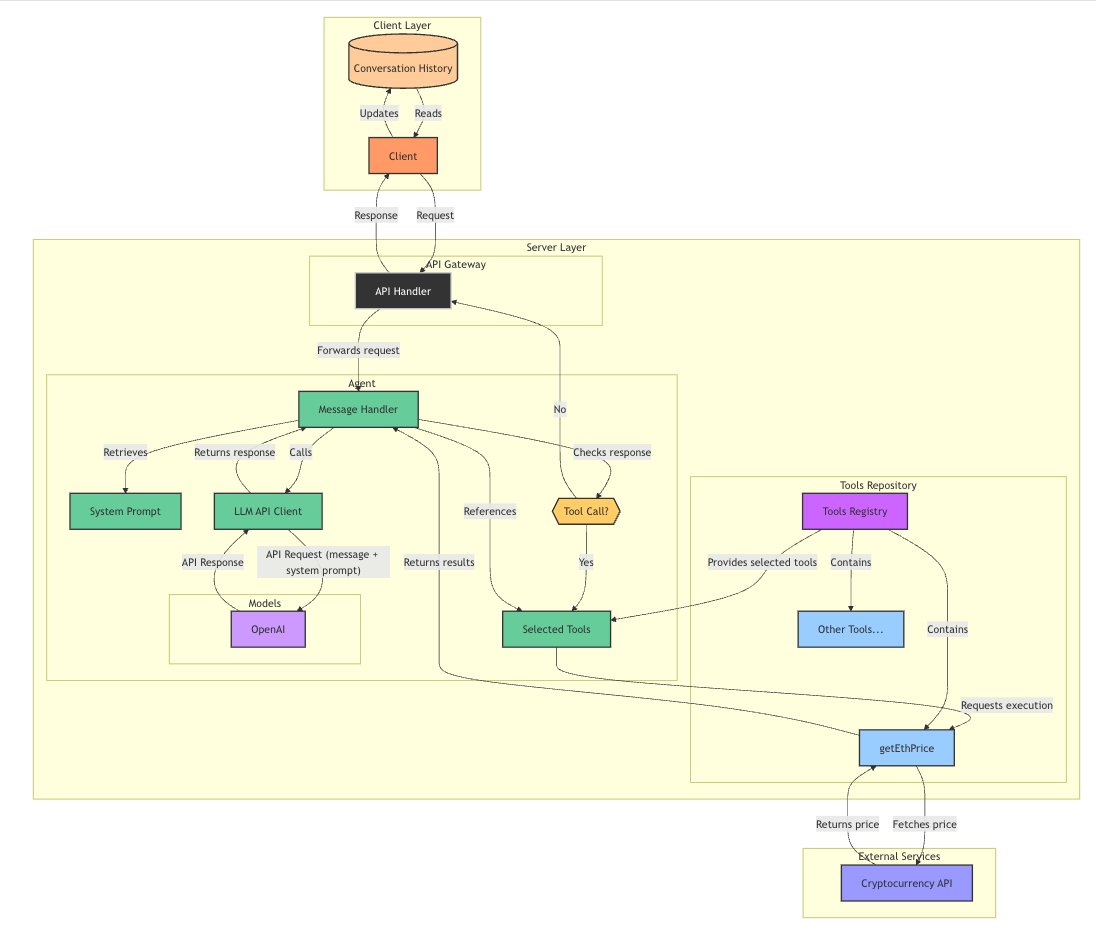

4. Agent with Context and Read-Only Tools – Eyes on the World

The agent gains access to external data sources—for example, fetching live crypto prices or viewing historical price trends. This marks a major leap: the agent can now augment its reasoning with live information.

And here’s the architecture diagram. We’ve now added a repository of tools that can be provided to our agent.

The AI agent sends a request to OpenAI. The model says it should call a tool (getEthPrice). Our tool talks to a cryptocurrency API to get the current Ethereum price.

The agent gets the tool’s result and sends a second request to OpenAI, which generates a human-friendly message from the data.
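The request, tool call, and second request described above can be sketched as a small loop. Everything here is a stand-in: `stub_model` imitates the model’s decision to call a tool, the response shape is a simplification rather than the actual OpenAI format, and `get_eth_price` returns a fixed placeholder value instead of calling a real price API.

```python
import json
from typing import Any, Callable


def get_eth_price() -> dict[str, Any]:
    # A real tool would call a price API here; this value is a placeholder.
    return {"symbol": "ETH", "price_usd": 2000.0}


# Registry mapping tool names (as the model sees them) to functions.
TOOLS: dict[str, Callable[[], dict[str, Any]]] = {"getEthPrice": get_eth_price}


def stub_model(messages: list[dict[str, str]]) -> dict[str, Any]:
    """Hypothetical model: asks for a tool first, then phrases the result."""
    last = messages[-1]
    if last["role"] == "tool":
        data = json.loads(last["content"])
        price = data["price_usd"]
        return {"content": f"{data['symbol']} is trading at ${price:,.2f}."}
    return {"tool_call": {"name": "getEthPrice"}}


def run_agent(user_text: str, model=stub_model, max_steps: int = 3) -> str:
    messages = [{"role": "user", "content": user_text}]
    for _ in range(max_steps):
        response = model(messages)
        call = response.get("tool_call")
        if call is None:
            # No tool requested: this is the final, human-friendly answer.
            return response["content"]
        tool = TOOLS.get(call["name"])
        result = tool() if tool else {"error": f"unknown tool {call['name']}"}
        # Feed the tool result back so the model can use it on the next request.
        messages.append(
            {"role": "tool", "name": call["name"], "content": json.dumps(result)}
        )
    return "Stopped: too many tool calls."


print(run_agent("What is the current price of ETH?"))
```

The `max_steps` cap is a design choice in this sketch: it keeps a misbehaving model from looping on tool calls forever.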

Instead of being a static model, we now have an information-integrated system, capable of contextualizing responses with current market data. The agent has read access, but no ability to act.

5. Agent with Context, Write Access, and Wallet Integration – Acts on the World

At this level, the agent not only reads from external systems—it can take action. With a connected crypto wallet and defined constraints, the agent can now execute trades.

This creates the beginning of a true agentic system: it has a connection with the outside world and can take real actions. At this point, we’re beyond chat and into the realm of embedded intelligence that interacts with real infrastructure.
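A write-capable tool is where constraints belong in code rather than in the prompt. The sketch below is hypothetical throughout: `StubWallet` is an in-memory stand-in for a real wallet, and the per-trade limit, allowed pairs, and confirmation flag are example guardrails, not the demo’s actual rules.

```python
from dataclasses import dataclass, field


@dataclass
class StubWallet:
    """Hypothetical in-memory wallet; a real one would sign transactions."""
    balances: dict[str, float] = field(
        default_factory=lambda: {"ETH": 1.0, "USDC": 0.0}
    )


MAX_ETH_PER_TRADE = 0.5  # assumed limit, chosen for illustration
ALLOWED_PAIRS = {("ETH", "USDC"), ("USDC", "ETH")}


def swap_tool(
    wallet: StubWallet,
    sell: str,
    buy: str,
    amount: float,
    eth_price_usd: float,
    confirmed: bool = False,
) -> str:
    # Every check runs in code, so the model cannot talk its way past them.
    if (sell, buy) not in ALLOWED_PAIRS:
        return "rejected: unsupported pair"
    if amount <= 0 or amount > wallet.balances.get(sell, 0.0):
        return "rejected: invalid amount or insufficient balance"
    eth_amount = amount if sell == "ETH" else amount / eth_price_usd
    if eth_amount > MAX_ETH_PER_TRADE:
        return "rejected: exceeds per-trade limit"
    if not confirmed:
        return "pending: user confirmation required"
    received = amount * eth_price_usd if sell == "ETH" else amount / eth_price_usd
    wallet.balances[sell] -= amount
    wallet.balances[buy] += received
    return f"executed: sold {amount} {sell} for {received} {buy}"


wallet = StubWallet()
print(swap_tool(wallet, "ETH", "USDC", 0.25, eth_price_usd=2000.0))
print(swap_tool(wallet, "ETH", "USDC", 0.25, eth_price_usd=2000.0, confirmed=True))
print(wallet.balances)
```

Returning a status string instead of raising lets the agent relay the outcome to the user, including the request for confirmation before anything moves.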

This is also where we leave vibe-coding land, and start to get into serious software engineering land.

Conclusion

You can think of agents as specialised products that do one thing, or a few closely related things, very well. We can then combine multiple agents to achieve larger goals.

In a nutshell, here are the core components of an agent:

- System prompt: What are the agent’s goals and typical tasks? How should the agent respond to users? When should it bring them back on track?

- Model: Are you using a general-purpose model like GPT-4o or Gemini Flash, or a reasoning model like o3 or DeepSeek R1? The right choice depends on the agent’s goal and the result you want.

- Context: Apart from the user’s message, are you sending conversation history, or additional system data?

- Tools: Which capabilities should the agent wield? The more tools you add, the harder it becomes for the agent to pick the right tool for a task.

Here’s the GitHub code repo for this demo.

And if you’re looking to build AI agents, reach out to me at the button below.